I spent hours with

WebIOPi framework today and decided to keep this note as a future reference.

Few days back I decided to create a RPi media center where I can play media files available within any device connected to my home network. I used WebIOPi to control GPIO and communicate with my RPi back and forth remotely from my mobile device.

How to install WebIOPi

You need python and make sure to install python before moving forward.

i) You can directly download install from the pi store.

ii) I took the second method, Downloaded WebIOPi from

sourceforge and execute,

tar xvzf WebIOPi-0.7.1.tar.gz

cd WebIOPi-0.7.1

sudo ./setup.sh

webiopi-0.7.1 is the latest version available at the moment.

After installing run

sudo /etc/init.d/webiopi start to start the webIOPi service.

Let's do some basic stuff to see our installation progress. If you haven't specify the port number webIOPi service will start on the port 8000. So let's find the IP address of the RPi and browse, http://192.168.1.104:8000. Provide

webiopi as the username and

raspberry as the password.

Click on the GPIO Header link and browse, So i'm using Raspberry Pi B+ and this is the GPIO pin layout.

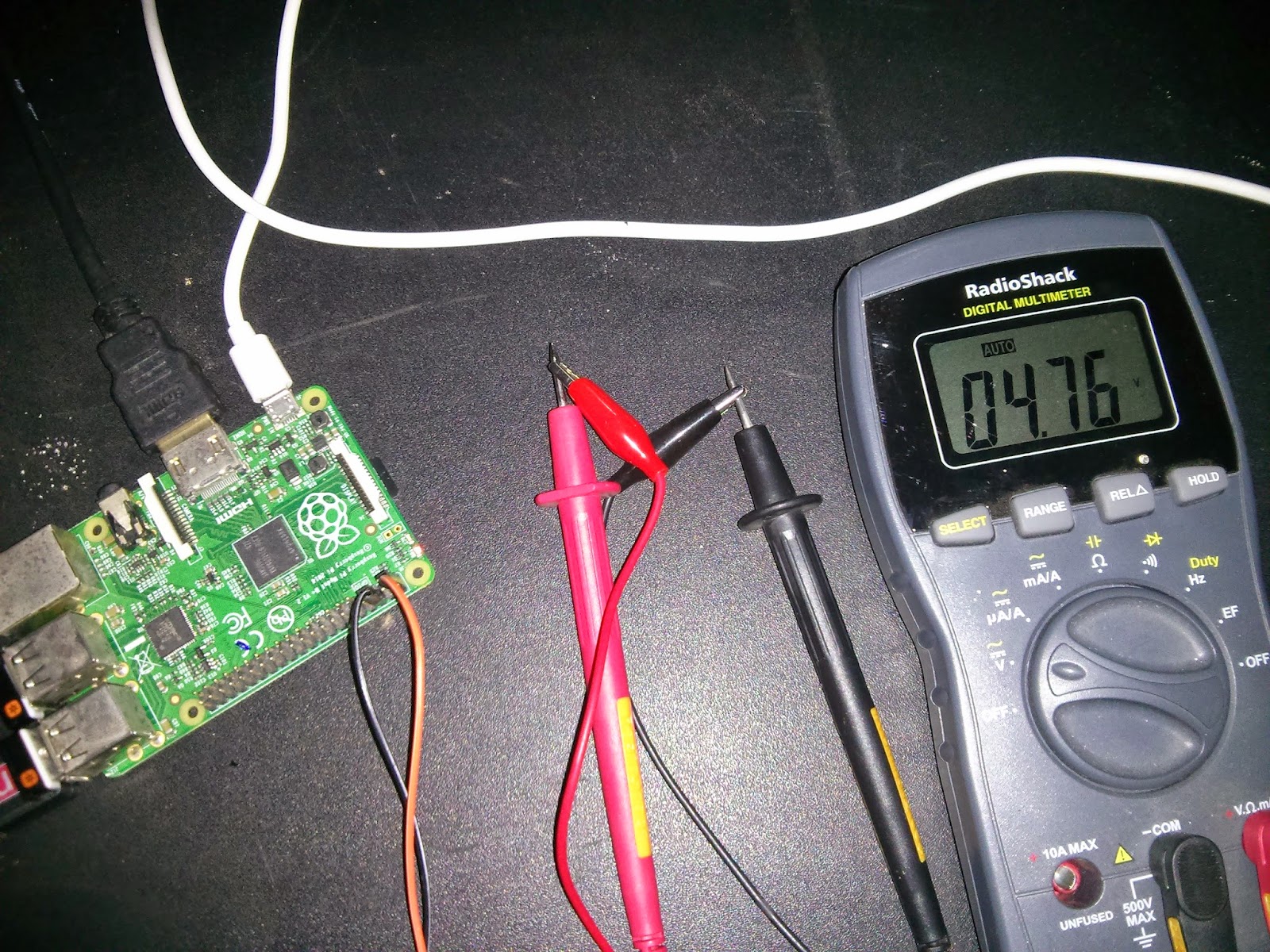

Let's use 1, 2, 6 PINs and verify this voltage values. I'm going to use a multimeter, two crocodile probes and two jumper cables for this.

Connect negative end to the ground PIN(6) and positive end to the PIN #1. Following is my voltage reading.

Disconnect the positive end from PIN 1 and connect to PIN #2,

There is a slight difference in the voltage reading, this can be due to the fact that I'm using wireless network adapter, mouse, keyboard attached to my RPi.

Let's toggle the WebIOPi OUT/IN button to change the GPIO direction/pin to change the output state and check multimeter reading one more time. For this I'm going to use PIN #12 so connected positive probe to the PIN #12. Then switched GPIO direction ans outout status like this.

This is my multimeter reading,

Great! Now I can control my Raspberry Pi's GPIO remotely. But here I accessed WebIOPi interface over the local network. I will put a note on "How to access WebIOPi interface over internet" very soon.